China pushes into the AI market with open source, as a higher performance floor fuels a race to curb hallucinations

China’s AI sector leans on open-source models to boost access and flexibility

“Always guessing, even when unsure”: hallucinations remain a stubborn flaw

As performance converges, trust goes to firms that rein in AI hallucinations

China is increasingly seen as having sharply narrowed the gap with the United States in generative AI. By leaning on open-source models, it is pushing past technical constraints, tapping into global demand, and rapidly expanding its footprint. Experts say this approach could reshape the competitive landscape of the global AI market.

The U.S.–China AI gap continues to narrow

On the 14th (local time), the South China Morning Post reported that the AI technology gap between China and the United States—once estimated at two to three years—has now shrunk to roughly three months. The inflow of AI talent and the adoption of open-source models that disclose source code are cited as key factors behind China’s rapid gains. Similar assessments have previously been offered by Hong Kong–based investment bank CLSA and U.S. AI research group Epoch AI.

China is also consolidating its lead in open-source AI models. While U.S. tech companies such as OpenAI, Anthropic, and Google have pursued closed strategies—keeping proprietary models behind paywalls—Chinese firms including DeepSeek, Alibaba’s Qwen, and Moonshot’s Kimi have made their model code publicly available for anyone to download and adapt. The approach is widely seen as a bid to spread Chinese AI models as broadly as possible and take control of the AI ecosystem. Even without a clear technological edge over U.S. rivals, Chinese players are now pushing into global markets by emphasizing accessibility and flexibility.

Performance among open-source models has improved markedly as well. According to an analysis released last month by AI benchmarking firm Artificial Analysis, “M2,” launched in October by Chinese startup MiniMax, ranked first worldwide in overall open-source AI performance. Built around a low-cost, high-efficiency strategy, the model uses a “mixture of experts” approach that activates only the parameters needed for a given task, sharply boosting computational efficiency and response speed. In total, eight China-developed models—including those from DeepSeek and Alibaba’s Qwen—made the global top 10. The only non-Chinese entries were two models from U.S.-based OpenAI, released under a “partially open” framework, ranking second and ninth.
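For readers who want a concrete picture of the “mixture of experts” idea, the sketch below shows the general routing technique in plain Python: a router scores every expert, only the top few are run, and their outputs are blended. It is a toy illustration, not MiniMax's implementation. The expert functions, the router scores, and the names `route_top_k` and `moe_forward` are all hypothetical.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    routed = route_top_k(gate_scores, k)
    output = sum(w * experts[i](x) for i, w in routed)
    return output, [i for i, _ in routed]

# Hypothetical toy experts: each one is just a scalar function.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_scores = [0.1, 2.0, 1.5, -1.0]  # made-up router scores for one input

output, used = moe_forward(3.0, experts, gate_scores, k=2)
print("experts used:", used)
print("output:", round(output, 4))
```

With four experts and `k=2`, half of the experts are never evaluated for this input, which is where the savings in computation come from.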

Pricing is another advantage. Many Chinese AI models are offered at less than half the cost of U.S. competitors, accelerating their uptake in the market. A report published last month by MIT and open-source AI platform Hugging Face showed that Chinese models accounted for 17% of all new open-model downloads on Hugging Face over the past year, surpassing the combined 15.8% share of models developed by U.S. firms such as Google, Meta, and OpenAI. It marked the first time Chinese models had overtaken their U.S. counterparts on that metric.

A chronic challenge for AI: hallucinations

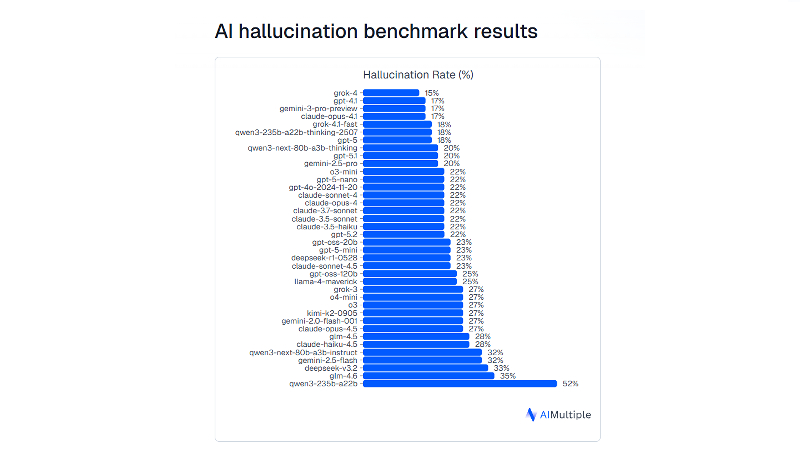

The rise of open-source models could reshape competition across the AI market. As open-source adoption becomes the norm, the performance floor rises, and most companies end up with broadly similar baseline capabilities in reasoning and generation. That means the ability to build a model entirely in-house is less likely to remain a defining edge. In that environment, competitive advantage may shift toward factors such as the volume, quality, and structure of proprietary data—and how often a model hallucinates.

Hallucinations refer to cases where AI-generated outputs include false or fabricated information, and they have been a persistent problem since the LLM market took off. According to a research paper OpenAI released in September, a root cause is that the way language models are trained and evaluated tends to reward guessing rather than admitting uncertainty. Under common evaluation setups, correct answers earn points, while responding “I don’t know” earns zero—pushing models to guess even when they are unsure.

Language models learn through next-word prediction on massive text datasets. They can pick up consistent patterns such as spelling and grammar, but struggle with rare, arbitrary facts—like an individual’s birthday. This is how factual errors emerge. For example, if asked for someone’s birthday, a model gets zero credit for saying “I don’t know,” but might score by guessing a date such as “September 10,” even if the odds are low. In other words, the structure incentivizes confident guesses, which can lead to misinformation.
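The incentive can be made concrete with a little arithmetic. If a guess is right with probability p, a correct answer earns 1 point, and a wrong answer costs c points, the expected score of guessing is p - (1 - p) * c, while “I don’t know” always scores 0. The sketch below works through the birthday example under two assumptions that are not from the paper: a blind guess is right 1 time in 365, and, for comparison, a hypothetical grading scheme that deducts a full point for a wrong answer.

```python
def expected_score(p_correct, abstain, wrong_penalty=0.0):
    """Expected points for one question.

    Correct answer: +1. Wrong answer: -wrong_penalty. "I don't know": 0.
    """
    if abstain:
        return 0.0
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty

p = 1 / 365  # assumed chance that a blind birthday guess is right

# Common setup described in the article: wrong answers cost nothing.
guess_binary = expected_score(p, abstain=False)
abstain_binary = expected_score(p, abstain=True)

# Hypothetical alternative: a wrong answer costs one point.
guess_penalized = expected_score(p, abstain=False, wrong_penalty=1.0)

print(f"guess, no penalty:   {guess_binary:.4f}")
print(f"abstain:             {abstain_binary:.4f}")
print(f"guess, with penalty: {guess_penalized:.4f}")
```

Without a penalty, guessing always scores at least as well as abstaining, however unlikely the guess is to be right. Once wrong answers cost points, a long-shot guess has a negative expected score and abstaining becomes the better choice.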

Could hallucinations become the new front line in the AI race?

Hallucinations are reportedly more severe in specialized domains. According to the Institute of Information & Communications Technology Planning & Evaluation, major AI models showed a hallucination-detection accuracy of 85% for “general knowledge,” but only 53% to 64% in expert fields: law (64%), medicine (58%), and psychology (53%). Here, detection accuracy refers to the share of cases in which an AI model correctly identifies which parts of its own output are hallucinations. Overseas research points to a similar picture. A study by the global AI think tank All About AI found hallucination rates of 15.6% in medical information and 18.7% in legal information.

Real-world fallout is also piling up. Michael Cohen, once known as U.S. President Donald Trump’s “fixer,” was caught after submitting fake case citations to a court in 2023 that he had generated using Google’s AI chatbot Bard. Last year, Air Canada was sued—and ordered to compensate a customer—after its AI chatbot provided incorrect information about discounts. In Korea, during the Jeju Air passenger-plane tragedy late last year, ChatGPT produced a hallucinated claim that the number “817” shown on a broadcaster’s screen was linked to North Korean operations, helping fuel conspiracy theories.

Experts say the companies that succeed in minimizing hallucinations will gain the upper hand. One market specialist noted that as open-source AI influence—particularly from China—has grown, model performance has converged and algorithm-level breakthroughs have become harder to come by, meaning the era when technical superiority alone guaranteed competitiveness is fading. He added that rather than spending heavily to build proprietary LLMs, AI companies should prioritize building systems that reduce hallucinations and strengthen consumer trust.